HRNet Reading Notes and Code Walkthrough

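Abstract:

Most existing methods recover high-resolution representations from the low-resolution representations produced by a high-to-low resolution network. This paper instead maintains high-resolution representations throughout the whole process. We start from a high-resolution subnetwork as the first stage and gradually add high-to-low resolution subnetworks one by one to form more stages. The multi-resolution subnetworks, each at a different resolution, are connected in parallel. We conduct repeated multi-scale fusion, so that each of the high-to-low resolution representations repeatedly receives information from the representations at the other resolutions. This yields rich high-resolution representations, and as a result the predicted keypoint heatmaps are potentially more accurate and spatially more precise.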

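1. Introduction

1.1 Existing methods

- (a) Symmetric structure: downsample first, then upsample, using skip connections to recover the information lost during downsampling;

- (b) Cascaded pyramid;

- (c) Downsample first, then upsample with transposed convolutions, without skip connections for feature fusion;

- (d) Dilated convolutions to reduce the number of downsampling steps, without skip connections for feature fusion.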

1.2 HRNet

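Brief description:

High-Resolution Net (HRNet) maintains high-resolution representations throughout the whole process. It starts from a high-resolution subnetwork as the first stage, adds high-to-low resolution subnetworks one by one to form more stages, and connects the multi-resolution subnetworks in parallel. Throughout the process, information is exchanged repeatedly among the parallel multi-resolution subnetworks, which performs repeated multi-scale fusion.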
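The following is a minimal sketch of this layout. The helper branch_layout is hypothetical and is not code from the HRNet repository. It lists the spatial size of every parallel branch in each stage. It assumes the first stage's branch is 64x48, which is what a 256x192 input gives after the 4x downsampling of the stem described in Section 4.3. Each newly added branch halves the resolution of the previous lowest-resolution branch.

```python
from typing import List, Tuple


def branch_layout(num_stages: int, height: int, width: int) -> List[List[Tuple[int, int]]]:
    """Spatial size (height, width) of every parallel branch, stage by stage.

    Stage s (1-based) keeps the branches of stage s - 1 and adds one new
    branch at half the resolution of the previous lowest-resolution branch,
    so branch r is downsampled by 2**r relative to branch 0.
    """
    return [
        [(height // 2 ** r, width // 2 ** r) for r in range(stage)]
        for stage in range(1, num_stages + 1)
    ]


if __name__ == "__main__":
    # Assumed 64x48 highest-resolution size (a 256x192 input after the 4x stem).
    for stage, branches in enumerate(branch_layout(4, 64, 48), start=1):
        print(f"stage {stage}: {branches}")
```

Stage 4 therefore runs four branches at 64x48, 32x24, 16x12 and 8x6.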

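Advantages:

- (a) The high-to-low resolution subnetworks are connected in parallel rather than in series, as in most existing solutions. Our approach therefore maintains high resolution instead of recovering it through a low-to-high process, so the predicted heatmap is potentially more precise spatially;

- (b) Most existing fusion schemes aggregate low-level and high-level representations. Instead, we perform repeated multi-scale fusion: low-resolution representations of the same depth and similar level help boost the high-resolution representations, and vice versa. As a result, the high-resolution representations are also rich for pose estimation, and our predicted heatmap is potentially more accurate.

  Personally, I think strengthening the fusion of multi-scale information is the right direction. As an analogy, joint bilateral filtering of an original image guided by a blurred image yields an image whose blur lies between the two. RGF (rolling guidance filtering) repeatedly uses the joint bilateral filtering result as the blurred guidance image, so the result moves ever closer to the original image. The same reasoning applies here. Representations at different resolutions are resampled to the same scale and fused over and over. Combined with the network's learning capacity, the result after multiple fusions should move closer to the correct representation.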
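The fusion step in (b) can be written compactly by reading the fusion code in Section 4.2, namely _make_fuse_layers and forward of HighResolutionModule. The notation is ours rather than the paper's. Indices are 0-based as in the code, branch 0 has the highest resolution, $n$ is the number of branches, $x_j$ is the output of branch $j$, and $y_i$ is the fused output for branch $i$:

$$
y_i = \mathrm{ReLU}\Big(\sum_{j=0}^{n-1} a(x_j, i)\Big),\qquad
a(x_j, i) =
\begin{cases}
\mathrm{Up}^{\text{nearest}}_{2^{j-i}}\big(\mathrm{BN}(\mathrm{Conv}_{1\times1}(x_j))\big), & j > i,\\
x_j, & j = i,\\
\big(\mathrm{BN}\circ\mathrm{Conv}^{s=2}_{3\times3}\big)\circ\big(\mathrm{ReLU}\circ\mathrm{BN}\circ\mathrm{Conv}^{s=2}_{3\times3}\big)^{i-j-1}(x_j), & j < i.
\end{cases}
$$

Each stride-2 step halves the resolution, so $i-j$ steps bring branch $j$ down to the resolution of branch $i$. Only the last step switches to the channel count of branch $i$ and omits the ReLU. When multi_scale_output is False, only $y_0$ is computed.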

2. 方法描述

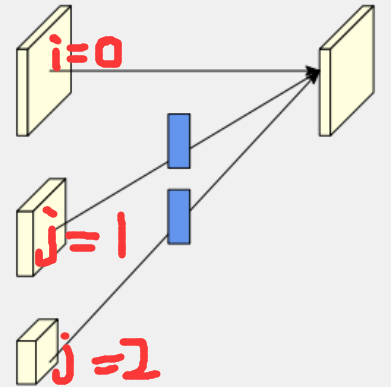

2.1 并行高分辨率子网

2.2 重复多尺度融合

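3. Experiments

3.1 Ablation study

3.1.1 Repeated multi-scale fusion

- (a) w/o intermediate exchange units (1 fusion): there is no exchange between the multi-resolution subnetworks except in the last exchange unit;

- (b) w/ across-stage exchange units only (3 fusions): there is no exchange between the parallel subnetworks within each stage;

- (c) w/ both across-stage and within-stage exchange units (8 fusions in total): this is the proposed method.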

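3.1.2 Resolution maintenance

In this variant, all four high-to-low resolution subnetworks are added at the beginning. They have the same depth, and the fusion scheme is the same as ours. This variant achieves 72.5 AP, lower than the 73.4 AP of our small network HRNet-W32. We believe the reason is that the low-level features extracted from the early stages of the low-resolution subnetworks are less helpful. In addition, a simple high-resolution network with similar parameters and computational complexity but without the parallel low-resolution subnetworks performs much worse.

3.1.3 Quality of representations at each resolution

We examine the quality of the heatmaps estimated from the feature maps at each resolution.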

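4. Code study (source code)

4.1 ResNet modules

These blocks are familiar, but here is a quick recap of the basic ResNet building blocks. In the figure, the left block is the basic block used by ResNet-18/34, and the right block is the one used by ResNet-50/101/152. Because those networks are much deeper, the right block adds 1x1 convolutions to reduce the channel dimension.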
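A quick weight count shows why the 1x1 bottleneck pays off. This sketch counts only convolution weights: all convolutions here are bias-free, and BN parameters are ignored. It compares the two blocks at the same 256-channel input/output width and assumes the standard expansion factor of 4, so the bottleneck's middle width is 64. The helper names are hypothetical.

```python
def conv_weights(in_ch: int, out_ch: int, k: int) -> int:
    """Number of weights in a bias-free k x k convolution."""
    return in_ch * out_ch * k * k


def basic_block_weights(ch: int) -> int:
    """BasicBlock: 3x3 (ch -> ch) followed by 3x3 (ch -> ch)."""
    return 2 * conv_weights(ch, ch, 3)


def bottleneck_weights(ch: int, expansion: int = 4) -> int:
    """Bottleneck: 1x1 (ch -> mid), 3x3 (mid -> mid), 1x1 (mid -> ch)."""
    mid = ch // expansion
    return (conv_weights(ch, mid, 1)
            + conv_weights(mid, mid, 3)
            + conv_weights(mid, ch, 1))


if __name__ == "__main__":
    print(basic_block_weights(256))  # 1179648
    print(bottleneck_weights(256))   # 69632
```

At this width the bottleneck needs roughly 17 times fewer convolution weights than the basic block. That saving is the motivation for using the bottleneck in the deeper networks.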

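- (a) `conv3x3`: nothing special. It wraps PyTorch's nn.Conv2d with the kernel size fixed to 3 and the padding fixed to 1, so the spatial size is kept when the stride is 1;

- (b) `BasicBlock`: builds the block on the left of the figure.

- (1) Every convolution is followed by a BN layer for normalization;

- (2) The 3x3 convolution right before the residual addition is followed only by BN, without ReLU. This avoids making all features positive after the addition and keeps the features diverse;

- (3) Skip connection, two cases:
  - When the block input and the residual branch (3x3 -> 3x3) have the same number of channels, they are added directly.
  - When they differ, the block input goes through a 1x1 convolution that increases or reduces its channels, followed by BN and no ReLU. This usually happens right after the resolution is reduced, because within one resolution the channel count usually stays the same. In this code the projection is built outside the block and passed in as `downsample`, and its stride equals the block's stride (2 when the resolution is reduced).

- (c) `Bottleneck`: builds the block on the right of the figure.

- (1) A 1x1 convolution first reduces the channels, a 3x3 convolution then extracts features, and a final 1x1 convolution expands the channels back;

- (2) Every convolution is followed by a BN layer for normalization;

- (3) The 1x1 convolution right before the residual addition is followed only by BN, without ReLU. This avoids making all features positive after the addition and keeps the features diverse;

- (4) Skip connection: the same two cases as in BasicBlock, with the residual branch 1x1 -> 3x3 -> 1x1. When the channels differ, the block input is projected by a 1x1 convolution whose stride equals the block's stride, followed by BN and no ReLU.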

```python
# Imports and constants shared by all code blocks in Section 4 (they form one file)
import logging
import os

import torch
import torch.nn as nn

BN_MOMENTUM = 0.1
logger = logging.getLogger(__name__)


def conv3x3(in_planes, out_planes, stride=1):
    """3x3 convolution with padding"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                     padding=1, bias=False)


class BasicBlock(nn.Module):
    expansion = 1  # output channels = planes * expansion

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = conv3x3(inplanes, planes, stride)
        self.bn1 = nn.BatchNorm2d(planes, momentum=BN_MOMENTUM)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        self.bn2 = nn.BatchNorm2d(planes, momentum=BN_MOMENTUM)
        self.downsample = downsample  # optional 1x1 projection for the shortcut
        self.stride = stride

    def forward(self, x):
        residual = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)  # BN only: no ReLU before the residual addition

        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)

        return out


class Bottleneck(nn.Module):
    expansion = 4  # output channels = planes * 4

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(planes, momentum=BN_MOMENTUM)
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,
                               padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(planes, momentum=BN_MOMENTUM)
        self.conv3 = nn.Conv2d(planes, planes * self.expansion, kernel_size=1,
                               bias=False)
        self.bn3 = nn.BatchNorm2d(planes * self.expansion,
                                  momentum=BN_MOMENTUM)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x

        out = self.conv1(x)  # 1x1: reduce channels
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)  # 3x3: extract features (carries the stride)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)  # 1x1: expand channels back
        out = self.bn3(out)    # BN only: no ReLU before the residual addition

        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)

        return out
```
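To make the Bottleneck channel bookkeeping concrete, the framework-free sketch below traces its three convolutions. It also applies the shortcut test that `_make_layer` and `_make_one_branch` use when they build the `downsample` projection: `stride != 1 or inplanes != planes * expansion`. The function name and the tuple format are hypothetical.

```python
from typing import List, Tuple

Layer = Tuple[str, int, int, int]  # (name, in_channels, out_channels, stride)


def bottleneck_trace(inplanes: int, planes: int, stride: int = 1,
                     expansion: int = 4) -> Tuple[List[Layer], bool]:
    """Conv layers of a Bottleneck and whether its shortcut needs a 1x1 projection."""
    out_ch = planes * expansion
    layers = [
        ("conv1 1x1", inplanes, planes, 1),
        ("conv2 3x3", planes, planes, stride),  # the stride sits on the 3x3 conv
        ("conv3 1x1", planes, out_ch, 1),       # followed by BN only, no ReLU
    ]
    needs_projection = stride != 1 or inplanes != out_ch
    return layers, needs_projection


if __name__ == "__main__":
    # First block of the stem's layer1: 64 -> 256 channels, so a projection is needed.
    print(bottleneck_trace(64, 64))
    # Later blocks of layer1: the input already has 256 channels, identity shortcut.
    print(bottleneck_trace(256, 64))
```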

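4.2 HighResolutionModule (high-resolution module)

When the module contains only one branch, it builds that branch and returns the branch output directly, with no fusion layers. When it contains more than one branch, each branch's input features are first passed through the corresponding branch to get that branch's output features, and then the fusion layers are applied.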
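The control flow of forward can be mimicked without PyTorch. In this toy sketch, each "feature map" is a 1-D list whose length halves from one branch to the next. The learned fusion layers are replaced by parameter-free stand-ins: nearest-neighbor repetition for upsampling, and plain subsampling instead of stride-2 convolutions. As a result, only the structure matches the real module, not the arithmetic. All names here are hypothetical.

```python
from typing import Callable, List

Vector = List[float]
Branch = Callable[[Vector], Vector]


def upsample_nearest(x: Vector, factor: int) -> Vector:
    """Nearest-neighbor upsampling: repeat every element `factor` times."""
    return [v for v in x for _ in range(factor)]


def subsample(x: Vector, factor: int) -> Vector:
    """Parameter-free stand-in for `factor`x downsampling by stride-2 convs."""
    return x[::factor]


def toy_hr_module(xs: List[Vector], branches: List[Branch],
                  multi_scale_output: bool = True) -> List[Vector]:
    # (1) a single branch: run it and return, no fusion and no final ReLU
    if len(xs) == 1:
        return [branches[0](xs[0])]
    # (2.1) run every branch on its own input
    xs = [branch(x) for branch, x in zip(branches, xs)]
    # (2.2) fuse: target i collects every source j resampled to i's size
    outputs = []
    for i in range(len(xs) if multi_scale_output else 1):
        y = [0.0] * len(xs[i])
        for j, x in enumerate(xs):
            if j > i:    # lower resolution: upsample by 2**(j - i)
                x = upsample_nearest(x, 2 ** (j - i))
            elif j < i:  # higher resolution: downsample by 2**(i - j)
                x = subsample(x, 2 ** (i - j))
            y = [a + b for a, b in zip(y, x)]
        outputs.append([max(0.0, v) for v in y])  # ReLU after the sum
    return outputs


if __name__ == "__main__":
    def identity(v: Vector) -> Vector:
        return v

    xs = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0], [100.0]]
    print(toy_hr_module(xs, [identity] * 3))
    # [[111.0, 112.0, 123.0, 124.0], [111.0, 123.0], [111.0]]
```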

- (a)

_check_branches: 判断num_branches (int)和num_blocks, num_inchannels, num_channels (list)三者的长度是否一致,否则报错; - (b)

_make_one_branch: 搭建一个分支,单个分支内部分辨率相等,一个分支由num_blocks[branch_index]个block组成,block可以是两种ResNet模块中的一种;

- (1) 首先判断是否降维或者输入输出的通道(

num_inchannels[branch_index]和 num_channels[branch_index] * block.expansion(通道扩张率))是否一致,不一致使用1z1卷积进行维度升/降,后接BN,不使用ReLU;- (2) 顺序搭建

num_blocks[branch_index]个block,第一个block需要考虑是否降维的情况,所以单独拿出来,后面1 到 num_blocks[branch_index]个block完全一致,使用循环搭建就行。此时注意在执行完第一个block后将num_inchannels[branch_index重新赋值为num_channels[branch_index] * block.expansion。

- ©

_make_branches: 循环调用_make_one_branch函数创建多个分支; - (d)

_make_fuse_layers:

(1) 如果分支数等于1,返回None,说明此事不需要使用融合模块;

(2) 双层循环:

for i in range(num_branches if self.multi_scale_output else 1):的作用是,如果需要产生多分辨率的结果,就双层循环num_branches次,如果只需要产生最高分辨率的表示,就将i确定为0。

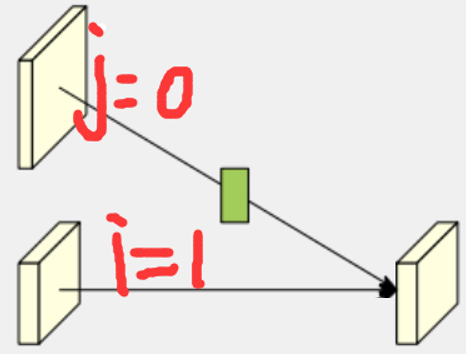

(2.1) 如果

j > i,此时的目标是将所有分支上采样到和i分支相同的分辨率并融合,也就是说j所代表的分支分辨率比i分支低,2**(j-i)表示j分支上采样这么多倍才能和i分支分辨率相同。先使用1x1卷积将j分支的通道数变得和i分支一致,进而跟着BN,然后依据上采样因子将j分支分辨率上采样到和i分支分辨率相同,此处使用最近邻插值;

(2.2) 如果

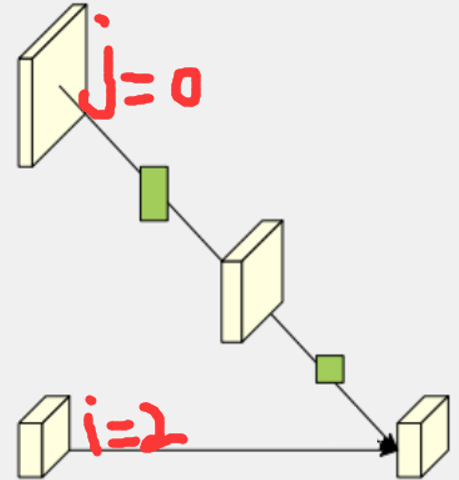

j = i,也就是说自身与自身之间不需要融合,nothing to do;(2.3) 如果

j < i,转换角色,此时最终目标是将所有分支采样到和i分支相同的分辨率并融合,注意,此时j所代表的分支分辨率比i分支高,正好和(2.1)相反。此时再次内嵌了一个循环,这层循环的作用是当i-j > 1时,也就是说两个分支的分辨率差了不止二倍,此时还是两倍两倍往上采样,例如i-j = 2时,j分支的分辨率比i分支大4倍,就需要上采样两次,循环次数就是2;

- (2.3.1) 当

k == i - j - 1时,举个例子,i = 2,j = 1, 此时仅循环一次,并采用当前模块,此时直接将j分支使用3x3的步长为2的卷积下采样(不使用bias),后接BN,不使用ReLU;

- (2.3.2) 当

k != i - j - 1时,举个例子,i = 3,j = 1, 此时循环两次,先采用当前模块,将j分支使用3x3的步长为2的卷积下采样(不使用bias)两倍,后接BN和ReLU,紧跟着再使用(2.3.1)中的模块,这是为了保证最后一次二倍下采样的卷积操作不使用ReLU,猜测也是为了保证融合后特征的多样性;

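- (e) `forward`: the forward function, which uses the functions above to run a HighResolutionModule;

(1) With only one branch, run that branch and return its output directly, with no fusion;

(2) With more than one branch, first pass each branch's input features through the corresponding branch to get that branch's output features, then apply the fusion layers;

- (2.1) Loop over the branches, feeding each branch's input features into the corresponding branch to obtain its output features;

- (2.2) Fusion: this is easy to follow with the figure above. The rules are:
  - For every target branch i, the summation starts from the top (highest-resolution) branch, hence `y = x[0] if i == 0 else self.fuse_layers[i][0](x[0])`.
  - When the loop reaches branch i itself, no fusion layer is needed and the features are added directly: `if i == j: y = y + x[j]`.
  - Every other branch (neither the top branch nor branch i itself) goes through its fusion layer before being added: `y = y + self.fuse_layers[i][j](x[j])`.
  - Finally, the ReLU-activated sum is appended to x_fuse. Its length is 1 for single-scale output or num_branches for multi-scale output.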
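Rules (2.1) to (2.3.2) of `_make_fuse_layers` can be summarized by listing, for each pair (i, j), the operations that fuse_layers[i][j] contains. The sketch below is a hypothetical, framework-free description that uses tuples instead of nn.Module objects. It follows the channel choices in the code. The channel list [32, 64, 128, 256] comes from the STAGE4 configuration in Section 4.3.

```python
from typing import List, Optional, Tuple


def fuse_ops(i: int, j: int, channels: List[int]) -> Optional[List[Tuple]]:
    """Operations that bring branch j to the resolution and width of branch i."""
    if j > i:  # (2.1) lower resolution: 1x1 conv + BN, then nearest upsampling
        return [("conv1x1", channels[j], channels[i]), ("bn",),
                ("upsample_nearest", 2 ** (j - i))]
    if j == i:  # (2.2) identity, stored as None
        return None
    ops = []  # (2.3) higher resolution: i - j stride-2 3x3 convs
    for k in range(i - j):
        if k == i - j - 1:  # (2.3.1) last step: switch to branch i's width, BN, no ReLU
            ops.append(("conv3x3_s2", channels[j], channels[i], "bn"))
        else:               # (2.3.2) earlier steps: keep branch j's width, BN + ReLU
            ops.append(("conv3x3_s2", channels[j], channels[j], "bn+relu"))
    return ops


def fuse_table(channels: List[int], multi_scale_output: bool = True):
    """Rows are target branches i; only row 0 exists for single-scale output."""
    n = len(channels)
    rows = n if multi_scale_output else 1
    return [[fuse_ops(i, j, channels) for j in range(n)] for i in range(rows)]


if __name__ == "__main__":
    channels = [32, 64, 128, 256]
    print(fuse_ops(0, 2, channels))  # branch 2 upsampled by 4 into branch 0
    print(fuse_ops(3, 1, channels))  # two stride-2 steps from branch 1 to branch 3
```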

```python
class HighResolutionModule(nn.Module):
    def __init__(self, num_branches, blocks, num_blocks, num_inchannels,
                 num_channels, fuse_method, multi_scale_output=True):
        super(HighResolutionModule, self).__init__()
        self._check_branches(
            num_branches, blocks, num_blocks, num_inchannels, num_channels)

        self.num_inchannels = num_inchannels
        self.fuse_method = fuse_method
        self.num_branches = num_branches

        self.multi_scale_output = multi_scale_output

        self.branches = self._make_branches(
            num_branches, blocks, num_blocks, num_channels)
        self.fuse_layers = self._make_fuse_layers()
        self.relu = nn.ReLU(True)

    def _check_branches(self, num_branches, blocks, num_blocks,
                        num_inchannels, num_channels):
        if num_branches != len(num_blocks):
            error_msg = 'NUM_BRANCHES({}) <> NUM_BLOCKS({})'.format(
                num_branches, len(num_blocks))
            logger.error(error_msg)
            raise ValueError(error_msg)

        if num_branches != len(num_channels):
            error_msg = 'NUM_BRANCHES({}) <> NUM_CHANNELS({})'.format(
                num_branches, len(num_channels))
            logger.error(error_msg)
            raise ValueError(error_msg)

        if num_branches != len(num_inchannels):
            error_msg = 'NUM_BRANCHES({}) <> NUM_INCHANNELS({})'.format(
                num_branches, len(num_inchannels))
            logger.error(error_msg)
            raise ValueError(error_msg)

    def _make_one_branch(self, branch_index, block, num_blocks, num_channels,
                         stride=1):
        # ---------- (1) begin ---------- #
        downsample = None
        if stride != 1 or \
           self.num_inchannels[branch_index] != num_channels[branch_index] * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.num_inchannels[branch_index],
                          num_channels[branch_index] * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(num_channels[branch_index] * block.expansion,
                               momentum=BN_MOMENTUM),
            )
        # ---------- (1) end ---------- #

        # ---------- (2) begin ---------- #
        layers = []
        layers.append(block(self.num_inchannels[branch_index],
                            num_channels[branch_index], stride, downsample))
        # ---------- (2) middle ---------- #
        self.num_inchannels[branch_index] = \
            num_channels[branch_index] * block.expansion
        for i in range(1, num_blocks[branch_index]):
            layers.append(block(self.num_inchannels[branch_index],
                                num_channels[branch_index]))
        # ---------- (2) end ---------- #

        return nn.Sequential(*layers)

    def _make_branches(self, num_branches, block, num_blocks, num_channels):
        branches = []

        for i in range(num_branches):
            branches.append(
                self._make_one_branch(i, block, num_blocks, num_channels))

        return nn.ModuleList(branches)

    def _make_fuse_layers(self):
        # ---------- (1) begin ---------- #
        if self.num_branches == 1:
            return None
        # ---------- (1) end ---------- #

        num_branches = self.num_branches
        num_inchannels = self.num_inchannels
        # ---------- (2) begin ---------- #
        fuse_layers = []
        for i in range(num_branches if self.multi_scale_output else 1):
            fuse_layer = []
            for j in range(num_branches):
                # ---------- (2.1) begin ---------- #
                if j > i:
                    fuse_layer.append(nn.Sequential(
                        nn.Conv2d(num_inchannels[j], num_inchannels[i],
                                  1, 1, 0, bias=False),
                        nn.BatchNorm2d(num_inchannels[i]),
                        nn.Upsample(scale_factor=2**(j-i), mode='nearest')))
                # ---------- (2.1) end ---------- #
                # ---------- (2.2) begin ---------- #
                elif j == i:
                    fuse_layer.append(None)
                # ---------- (2.2) end ---------- #
                # ---------- (2.3) begin ---------- #
                else:
                    conv3x3s = []
                    for k in range(i-j):
                        # ---------- (2.3.1) begin ---------- #
                        if k == i - j - 1:
                            num_outchannels_conv3x3 = num_inchannels[i]
                            conv3x3s.append(nn.Sequential(
                                nn.Conv2d(num_inchannels[j],
                                          num_outchannels_conv3x3,
                                          3, 2, 1, bias=False),
                                nn.BatchNorm2d(num_outchannels_conv3x3)))
                        # ---------- (2.3.1) end ---------- #
                        # ---------- (2.3.2) begin ---------- #
                        else:
                            num_outchannels_conv3x3 = num_inchannels[j]
                            conv3x3s.append(nn.Sequential(
                                nn.Conv2d(num_inchannels[j],
                                          num_outchannels_conv3x3,
                                          3, 2, 1, bias=False),
                                nn.BatchNorm2d(num_outchannels_conv3x3),
                                nn.ReLU(True)))
                        # ---------- (2.3.2) end ---------- #
                    fuse_layer.append(nn.Sequential(*conv3x3s))
                # ---------- (2.3) end ---------- #
            fuse_layers.append(nn.ModuleList(fuse_layer))
        # ---------- (2) end ---------- #

        return nn.ModuleList(fuse_layers)

    def get_num_inchannels(self):
        return self.num_inchannels

    def forward(self, x):
        # ---------- (1) begin ---------- #
        if self.num_branches == 1:
            return [self.branches[0](x[0])]
        # ---------- (1) end ---------- #

        # ---------- (2) begin ---------- #
        # ---------- (2.1) begin ---------- #
        for i in range(self.num_branches):
            x[i] = self.branches[i](x[i])
        # ---------- (2.1) end ---------- #

        # ---------- (2.2) begin ---------- #
        x_fuse = []
        for i in range(len(self.fuse_layers)):
            y = x[0] if i == 0 else self.fuse_layers[i][0](x[0])
            for j in range(1, self.num_branches):
                if i == j:
                    y = y + x[j]
                else:
                    y = y + self.fuse_layers[i][j](x[j])
            x_fuse.append(self.relu(y))
        # ---------- (2.2) end ---------- #
        # ---------- (2) end ---------- #

        return x_fuse
```
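The channel bookkeeping of `_make_one_branch` can be traced with a small pure-Python sketch. The same pattern reappears in `_make_layer` in Section 4.3. The helper name and tuple layout are hypothetical. The logic mirrors the code: only the first block may receive a projection, after which num_inchannels[branch_index] is overwritten with num_channels[branch_index] * block.expansion.

```python
from typing import List, Tuple

BlockSpec = Tuple[int, int, int, bool]  # (in_channels, out_channels, stride, has_projection)


def branch_plan(inchannels: int, channels: int, expansion: int, num_blocks: int,
                stride: int = 1) -> Tuple[List[BlockSpec], int]:
    """Blocks of one branch, plus the updated input width for the next module."""
    out_ch = channels * expansion
    plan = [(inchannels, out_ch, stride, stride != 1 or inchannels != out_ch)]
    inchannels = out_ch  # mirrors: self.num_inchannels[branch_index] = ...
    for _ in range(1, num_blocks):
        plan.append((inchannels, out_ch, 1, False))
    return plan, inchannels


if __name__ == "__main__":
    # A stage-2 branch: BASIC blocks (expansion 1), 32 channels, 4 blocks.
    print(branch_plan(32, 32, 1, 4))
    # The stem's layer1: Bottleneck (expansion 4), planes 64, 4 blocks, input 64.
    print(branch_plan(64, 64, 4, 4))
```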

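4.3 PoseHighResolutionNet (overall model structure)

The model consists of 5 stages:
- Stage 1 is the stem module.
- Stages 2, 3 and 4 each consist of a transition module followed by HighResolutionModule(s).
- The last stage is final_layer.

Stage 1 downsamples twice. The later stages never downsample the highest-resolution branch that produces the final output, so the predicted heatmap has 1/4 of the input image resolution. The main hyperparameters of stages 2, 3 and 4 are: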

```python
POSE_HIGH_RESOLUTION_NET.STAGE2.NUM_MODULES = 1          # number of HighResolutionModules in this stage
POSE_HIGH_RESOLUTION_NET.STAGE2.NUM_BRANCHES = 2         # number of branches in this stage
POSE_HIGH_RESOLUTION_NET.STAGE2.NUM_BLOCKS = [4, 4]      # number of blocks on each branch
POSE_HIGH_RESOLUTION_NET.STAGE2.NUM_CHANNELS = [32, 64]  # channels of each branch (multiplied by block.expansion)
POSE_HIGH_RESOLUTION_NET.STAGE2.BLOCK = 'BASIC'          # which of the blocks defined in 4.1 to use
POSE_HIGH_RESOLUTION_NET.STAGE2.FUSE_METHOD = 'SUM'      # fusion method: sum or concatenation

POSE_HIGH_RESOLUTION_NET.STAGE3.NUM_MODULES = 1
POSE_HIGH_RESOLUTION_NET.STAGE3.NUM_BRANCHES = 3
POSE_HIGH_RESOLUTION_NET.STAGE3.NUM_BLOCKS = [4, 4, 4]
POSE_HIGH_RESOLUTION_NET.STAGE3.NUM_CHANNELS = [32, 64, 128]
POSE_HIGH_RESOLUTION_NET.STAGE3.BLOCK = 'BASIC'
POSE_HIGH_RESOLUTION_NET.STAGE3.FUSE_METHOD = 'SUM'

POSE_HIGH_RESOLUTION_NET.STAGE4.NUM_MODULES = 1
POSE_HIGH_RESOLUTION_NET.STAGE4.NUM_BRANCHES = 4
POSE_HIGH_RESOLUTION_NET.STAGE4.NUM_BLOCKS = [4, 4, 4, 4]
POSE_HIGH_RESOLUTION_NET.STAGE4.NUM_CHANNELS = [32, 64, 128, 256]
POSE_HIGH_RESOLUTION_NET.STAGE4.BLOCK = 'BASIC'
POSE_HIGH_RESOLUTION_NET.STAGE4.FUSE_METHOD = 'SUM'
```

- (a) `__init__` & `forward`: these two functions correspond to each other, so they are described together;

- (1) stem: conv (stride 2) -> BN -> ReLU -> conv (stride 2) -> BN -> ReLU -> Bottleneck x 4, giving (H/4, W/4, 256);

- (2) stage2: transition + HighResolutionModule, BRANCHES = 2, BASIC, channels [32, 64], blocks [4, 4];

- (3) stage3: transition + HighResolutionModule, BRANCHES = 3, BASIC, channels [32, 64, 128], blocks [4, 4, 4];

- (4) stage4: transition + HighResolutionModule, BRANCHES = 4, BASIC, channels [32, 64, 128, 256], blocks [4, 4, 4, 4];

- (5) final_layer: a single convolution, no BN, no ReLU;

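- (b) `_make_layer`: called in the stem. It builds a stack of BasicBlock or Bottleneck blocks. The first block is built separately, outside the loop, because only it needs to change the channel dimension (64 -> 256). The remaining blocks (indices 1 to blocks - 1) are built in a loop, and all of them have the same input and output channels (256);

- (c) `_make_transition_layer`: derives the branches of the current stage, i.e. the feature maps at different resolutions, from the output of the previous stage. Example 1: from stage1 to stage2, the previous output has a single resolution (H/4, W/4, 256). Stage2 needs two branches, so `_make_transition_layer` must produce two feature maps, at (H/4, W/4) and (H/8, W/8);

- (1) The outer loop runs over the number of branches the current stage needs. Two cases arise:
  - The previous stage already has a feature map at that resolution, e.g. (H/4, W/4). Its channel count is compared with the required one. If they match, the feature map is reused directly (None is stored). Otherwise a 3x3 convolution changes the channels, followed by BN and ReLU. At first glance this 3x3 convolution seems to have no padding, but in nn.Conv2d(..., 3, 1, 1, bias=False) the positional arguments 3, 1, 1 are kernel_size=3, stride=1, padding=1. Padding is therefore applied and the resolution is preserved.
  - The previous stage has no feature map at the required resolution, e.g. (H/8, W/8). It is produced by downsampling with 3x3 stride-2 convolutions, each followed by BN and ReLU. Every such downsampling path takes the previous stage's last branch (the lowest-resolution one) as input;

- (d) `_make_stage`: calls HighResolutionModule to build the branches and the fusion between them. It does this according to each stage's NUM_MODULES in the config (all set to 1 here). The code comment "multi_scale_output is only used last module" means that reset_multi_scale_output is set to False only when multi_scale_output = False and the module is the last one of the stage; in every other case it is True. Only stage4 is built with multi_scale_output=False in `__init__`. The HighResolutionModules in stage2 and stage3 therefore produce multi-scale outputs, while the one in stage4 produces a single-scale output;

- (e) `init_weights`: not studied yet.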
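The branching logic of `_make_transition_layer` from item (c) can be traced with the following framework-free sketch. It mirrors the loop structure of the code but returns (kernel, stride, in_ch, out_ch) tuples instead of nn.Sequential modules. The function name is hypothetical. The channel lists come from the configuration above, and the stem outputs 256 channels.

```python
from typing import List, Optional, Tuple

Conv = Tuple[int, int, int, int]  # (kernel, stride, in_channels, out_channels)


def transition_plan(pre: List[int], cur: List[int]) -> List[Optional[List[Conv]]]:
    """One entry per branch of the new stage; None means 'reuse the input as is'."""
    plan: List[Optional[List[Conv]]] = []
    for i, out_ch in enumerate(cur):
        if i < len(pre):
            # existing resolution: 3x3 conv (stride 1, padding 1) only if the width changes
            plan.append([(3, 1, pre[i], out_ch)] if out_ch != pre[i] else None)
        else:
            # new, lower resolution: stride-2 3x3 convs from the last (lowest-res) branch
            steps = []
            for j in range(i + 1 - len(pre)):
                in_ch = pre[-1]
                step_out = out_ch if j == i - len(pre) else in_ch
                steps.append((3, 2, in_ch, step_out))
            plan.append(steps)
    return plan


if __name__ == "__main__":
    print(transition_plan([256], [32, 64]))          # stage1 -> stage2
    print(transition_plan([32, 64], [32, 64, 128]))  # stage2 -> stage3
```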

```python
blocks_dict = {
    'BASIC': BasicBlock,
    'BOTTLENECK': Bottleneck
}


class PoseHighResolutionNet(nn.Module):

    def __init__(self, cfg, **kwargs):
        self.inplanes = 64
        extra = cfg.MODEL.EXTRA
        super(PoseHighResolutionNet, self).__init__()

        # ---------- (1) begin ---------- #
        # stem net
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3, stride=2, padding=1,
                               bias=False)
        self.bn1 = nn.BatchNorm2d(64, momentum=BN_MOMENTUM)
        self.conv2 = nn.Conv2d(64, 64, kernel_size=3, stride=2, padding=1,
                               bias=False)
        self.bn2 = nn.BatchNorm2d(64, momentum=BN_MOMENTUM)
        self.relu = nn.ReLU(inplace=True)
        self.layer1 = self._make_layer(Bottleneck, 64, 4)
        # ---------- (1) end ---------- #

        # ---------- (2) begin ---------- #
        self.stage2_cfg = cfg['MODEL']['EXTRA']['STAGE2']
        num_channels = self.stage2_cfg['NUM_CHANNELS']
        block = blocks_dict[self.stage2_cfg['BLOCK']]
        num_channels = [num_channels[i] * block.expansion
                        for i in range(len(num_channels))]
        self.transition1 = self._make_transition_layer([256], num_channels)
        self.stage2, pre_stage_channels = self._make_stage(
            self.stage2_cfg, num_channels)
        # ---------- (2) end ---------- #

        # ---------- (3) begin ---------- #
        self.stage3_cfg = cfg['MODEL']['EXTRA']['STAGE3']
        num_channels = self.stage3_cfg['NUM_CHANNELS']
        block = blocks_dict[self.stage3_cfg['BLOCK']]
        num_channels = [num_channels[i] * block.expansion
                        for i in range(len(num_channels))]
        self.transition2 = self._make_transition_layer(
            pre_stage_channels, num_channels)
        self.stage3, pre_stage_channels = self._make_stage(
            self.stage3_cfg, num_channels)
        # ---------- (3) end ---------- #

        # ---------- (4) begin ---------- #
        self.stage4_cfg = cfg['MODEL']['EXTRA']['STAGE4']
        num_channels = self.stage4_cfg['NUM_CHANNELS']
        block = blocks_dict[self.stage4_cfg['BLOCK']]
        num_channels = [num_channels[i] * block.expansion
                        for i in range(len(num_channels))]
        self.transition3 = self._make_transition_layer(
            pre_stage_channels, num_channels)
        self.stage4, pre_stage_channels = self._make_stage(
            self.stage4_cfg, num_channels, multi_scale_output=False)
        # ---------- (4) end ---------- #

        # ---------- (5) begin ---------- #
        self.final_layer = nn.Conv2d(
            in_channels=pre_stage_channels[0],
            out_channels=cfg.MODEL.NUM_JOINTS,
            kernel_size=extra.FINAL_CONV_KERNEL,
            stride=1,
            padding=1 if extra.FINAL_CONV_KERNEL == 3 else 0
        )
        # ---------- (5) end ---------- #

        self.pretrained_layers = cfg['MODEL']['EXTRA']['PRETRAINED_LAYERS']

    def _make_transition_layer(self, num_channels_pre_layer,
                               num_channels_cur_layer):
        num_branches_cur = len(num_channels_cur_layer)
        num_branches_pre = len(num_channels_pre_layer)

        # ---------- (1) begin ---------- #
        transition_layers = []
        for i in range(num_branches_cur):
            if i < num_branches_pre:
                if num_channels_cur_layer[i] != num_channels_pre_layer[i]:
                    transition_layers.append(nn.Sequential(
                        # positional args: kernel_size=3, stride=1, padding=1
                        nn.Conv2d(num_channels_pre_layer[i],
                                  num_channels_cur_layer[i],
                                  3, 1, 1, bias=False),
                        nn.BatchNorm2d(num_channels_cur_layer[i]),
                        nn.ReLU(inplace=True)))
                else:
                    transition_layers.append(None)
            else:
                # new lower-resolution branch, built from the last previous branch
                conv3x3s = []
                for j in range(i+1-num_branches_pre):
                    inchannels = num_channels_pre_layer[-1]
                    outchannels = num_channels_cur_layer[i] \
                        if j == i-num_branches_pre else inchannels
                    conv3x3s.append(nn.Sequential(
                        nn.Conv2d(inchannels, outchannels, 3, 2, 1, bias=False),
                        nn.BatchNorm2d(outchannels),
                        nn.ReLU(inplace=True)))
                transition_layers.append(nn.Sequential(*conv3x3s))
        # ---------- (1) end ---------- #

        return nn.ModuleList(transition_layers)

    def _make_layer(self, block, planes, blocks, stride=1):
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(planes * block.expansion, momentum=BN_MOMENTUM),
            )

        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample))
        self.inplanes = planes * block.expansion
        for i in range(1, blocks):
            layers.append(block(self.inplanes, planes))

        return nn.Sequential(*layers)

    def _make_stage(self, layer_config, num_inchannels,
                    multi_scale_output=True):
        num_modules = layer_config['NUM_MODULES']
        num_branches = layer_config['NUM_BRANCHES']
        num_blocks = layer_config['NUM_BLOCKS']
        num_channels = layer_config['NUM_CHANNELS']
        block = blocks_dict[layer_config['BLOCK']]
        fuse_method = layer_config['FUSE_METHOD']

        modules = []
        for i in range(num_modules):
            # multi_scale_output is only used last module
            if not multi_scale_output and i == num_modules - 1:
                reset_multi_scale_output = False
            else:
                reset_multi_scale_output = True

            modules.append(HighResolutionModule(
                num_branches, block, num_blocks, num_inchannels,
                num_channels, fuse_method, reset_multi_scale_output))
            num_inchannels = modules[-1].get_num_inchannels()

        return nn.Sequential(*modules), num_inchannels

    def forward(self, x):
        # ---------- (1) begin ---------- #
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.conv2(x)
        x = self.bn2(x)
        x = self.relu(x)
        x = self.layer1(x)
        # ---------- (1) end ---------- #

        # ---------- (2) begin ---------- #
        x_list = []
        for i in range(self.stage2_cfg['NUM_BRANCHES']):
            if self.transition1[i] is not None:
                x_list.append(self.transition1[i](x))
            else:
                x_list.append(x)
        y_list = self.stage2(x_list)
        # ---------- (2) end ---------- #

        # ---------- (3) begin ---------- #
        x_list = []
        for i in range(self.stage3_cfg['NUM_BRANCHES']):
            if self.transition2[i] is not None:
                x_list.append(self.transition2[i](y_list[-1]))
            else:
                x_list.append(y_list[i])
        y_list = self.stage3(x_list)
        # ---------- (3) end ---------- #

        # ---------- (4) begin ---------- #
        x_list = []
        for i in range(self.stage4_cfg['NUM_BRANCHES']):
            if self.transition3[i] is not None:
                x_list.append(self.transition3[i](y_list[-1]))
            else:
                x_list.append(y_list[i])
        y_list = self.stage4(x_list)
        # ---------- (4) end ---------- #

        # ---------- (5) begin ---------- #
        x = self.final_layer(y_list[0])
        # ---------- (5) end ---------- #

        return x

    def init_weights(self, pretrained=''):
        logger.info('=> init weights from normal distribution')
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                # nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                nn.init.normal_(m.weight, std=0.001)
                for name, _ in m.named_parameters():
                    if name in ['bias']:
                        nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.ConvTranspose2d):
                nn.init.normal_(m.weight, std=0.001)
                for name, _ in m.named_parameters():
                    if name in ['bias']:
                        nn.init.constant_(m.bias, 0)

        if os.path.isfile(pretrained):
            pretrained_state_dict = torch.load(pretrained)
            logger.info('=> loading pretrained model {}'.format(pretrained))

            need_init_state_dict = {}
            for name, m in pretrained_state_dict.items():
                # '==' rather than 'is': identity checks on string literals are unreliable
                if name.split('.')[0] in self.pretrained_layers \
                   or self.pretrained_layers[0] == '*':
                    need_init_state_dict[name] = m
            self.load_state_dict(need_init_state_dict, strict=False)
        elif pretrained:
            logger.error('=> please download pre-trained models first!')
            raise ValueError('{} does not exist!'.format(pretrained))


def get_pose_net(cfg, is_train, **kwargs):
    model = PoseHighResolutionNet(cfg, **kwargs)

    if is_train and cfg.MODEL.INIT_WEIGHTS:
        model.init_weights(cfg.MODEL.PRETRAINED)

    return model
```
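The 1/4 heatmap resolution follows from the standard convolution output-size formula applied to the stem's two 3x3, stride-2, padding-1 convolutions. The final_layer padding rule (padding=1 for a 3x3 kernel, otherwise 0) then keeps that size. The sketch below assumes, as stated above, that layer1 and stages 2 to 4 preserve the size of the highest-resolution branch. The 256x192 input is only an example value, and the function names are hypothetical.

```python
from typing import Tuple


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def heatmap_size(height: int, width: int, final_kernel: int = 1) -> Tuple[int, int]:
    h, w = height, width
    for _ in range(2):  # stem: conv1 and conv2, both 3x3 / stride 2 / padding 1
        h, w = conv_out(h, 3, 2, 1), conv_out(w, 3, 2, 1)
    # layer1 and stages 2-4 keep branch 0 at this size; final_layer uses stride 1
    pad = 1 if final_kernel == 3 else 0
    return conv_out(h, final_kernel, 1, pad), conv_out(w, final_kernel, 1, pad)


if __name__ == "__main__":
    print(heatmap_size(256, 192))     # (64, 48)
    print(heatmap_size(256, 192, 3))  # (64, 48)
```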
