How to measure the “non-linear correlation” between multiple variables?

Data Science in the Real World

This article presents two ways of calculating the non-linear correlation between any number of discrete variables. In a data analysis project, the objective is twofold: on the one hand, to know how much information the variables share with each other, and therefore whether the available data contain the information one is looking for; on the other hand, to identify the smallest set of variables that contains the largest amount of useful information.

The different types of relationships between variables

Linearity

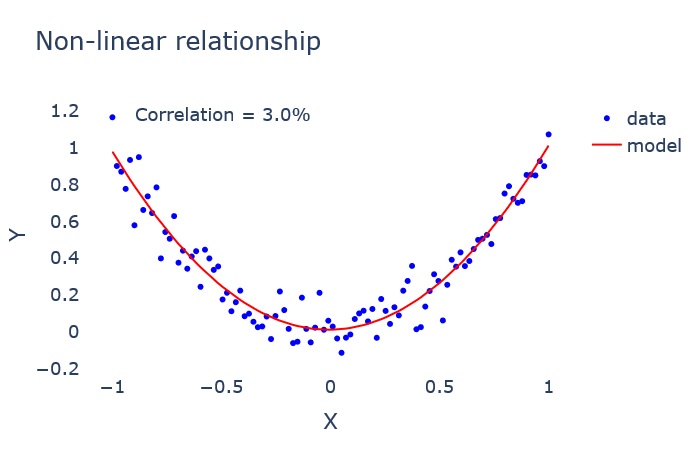

The best-known relationship between several variables is the linear one. This is the type of relationship measured by the classical correlation coefficient: the closer it is, in absolute value, to 1, the closer the variables are to an exact linear relationship.

However, there are plenty of other potential relationships between variables that the conventional linear correlation cannot capture.

To find such non-linear relationships between variables, other correlation measures should be used. The price to pay is that they work only with discrete, or discretized, variables.
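
To make the limitation of linear correlation concrete, here is a minimal sketch on hypothetical toy data (the arrays `x` and `y` are not from the article): `y` is fully determined by `x`, yet Pearson's coefficient is essentially zero because the relationship is quadratic and symmetric around zero.

```python
import numpy as np

# Hypothetical toy data: y is an exact function of x, but not a linear one.
x = np.arange(-5, 6)      # -5, -4, ..., 5 (symmetric around 0)
y = x ** 2

# Pearson's linear correlation coefficient between x and y.
r = np.corrcoef(x, y)[0, 1]
print(f"Pearson r = {r:.3f}")
```

A coefficient this close to zero says nothing about whether `x` is useful for predicting `y`; it only says the dependence is not linear.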

In addition, a method for calculating multivariate correlations makes it possible to take into account the two main types of interaction that variables may present: information redundancy and information complementarity.

Redundancy

When two variables (hereafter X and Y) share information in a redundant manner, the amount of information provided by X and Y together to predict Z is less than the sum of the amount of information provided by X to predict Z and that provided by Y to predict Z.

In the extreme case, X = Y. Then, if the values taken by Z can be correctly predicted 50% of the time by X (and by Y), they still cannot be predicted perfectly (i.e. 100% of the time) by the variables X and Y together.
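
The redundancy case can be checked numerically on the four rows of the X = Y table. This is a rough sketch that uses mutual information as the "amount of information", which is an assumption at this point in the article; the helper names `entropy` and `mutual_information` are illustrative.

```python
from collections import Counter
from math import log

def entropy(*columns):
    """Joint entropy (in nats) of one or more discrete columns."""
    rows = list(zip(*columns))
    counts = Counter(rows)
    return -sum(c / len(rows) * log(c / len(rows)) for c in counts.values())

def mutual_information(descriptors, target):
    """I(descriptors; target) = H(descriptors) + H(target) - H(descriptors, target)."""
    return entropy(*descriptors) + entropy(target) - entropy(*descriptors, target)

# Rows of the redundancy table: X and Y are identical.
X = [0, 0, 1, 1]
Y = [0, 0, 1, 1]
Z = [0, 0, 0, 1]

i_x = mutual_information([X], Z)
i_y = mutual_information([Y], Z)
i_xy = mutual_information([X, Y], Z)
print(i_x, i_y, i_xy)
```

Because Y is a copy of X, the pair carries exactly as much information about Z as X alone, which is half of the sum of the two individual amounts.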

| X | Y | Z |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 0 | 0 |
| 1 | 1 | 0 |
| 1 | 1 | 1 |

Complementarity

The complementarity relationship is the exact opposite situation. In the extreme case, X provides no information about Z, neither does Y, but the variables X and Y together perfectly predict the values taken by Z. In such a case, the correlation between X and Z is zero, as is the correlation between Y and Z, but the correlation between X, Y and Z is 100%.

These complementarity relationships only occur in the case of non-linear relationships, and must be taken into account to avoid errors when trying to reduce the dimensionality of a data analysis problem: discarding X and Y because neither provides any information about Z when considered on its own would be a bad idea.
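
The extreme complementarity case is the XOR pattern. As a hedged sketch (again assuming mutual information as the measure, with illustrative helper names), each variable alone shares nothing with Z, while the pair determines Z completely:

```python
from collections import Counter
from math import log

def entropy(*columns):
    """Joint entropy (in nats) of one or more discrete columns."""
    rows = list(zip(*columns))
    counts = Counter(rows)
    return -sum(c / len(rows) * log(c / len(rows)) for c in counts.values())

def mutual_information(descriptors, target):
    """I(descriptors; target) = H(descriptors) + H(target) - H(descriptors, target)."""
    return entropy(*descriptors) + entropy(target) - entropy(*descriptors, target)

# Rows of the complementarity table: Z is the exclusive OR of X and Y.
X = [0, 0, 1, 1]
Y = [0, 1, 0, 1]
Z = [0, 1, 1, 0]

i_x = mutual_information([X], Z)       # X alone says nothing about Z
i_y = mutual_information([Y], Z)       # neither does Y
i_xy = mutual_information([X, Y], Z)   # together they give all of H(Z)
print(i_x, i_y, i_xy, entropy(Z))
```

A variable-selection step that ranks descriptors one at a time would discard both X and Y here, which is exactly the error described above.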

| X | Y | Z |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

Two possible measures of “multivariate non-linear correlation”

There are many possible measures of (multivariate) non-linear correlation (e.g. multivariate mutual information, maximal information coefficient or MIC, etc.). I present here two of them whose properties, in my opinion, satisfy exactly what one would expect from such measures. The only caveats are that they require discrete variables and are very computationally intensive.

Symmetric measure

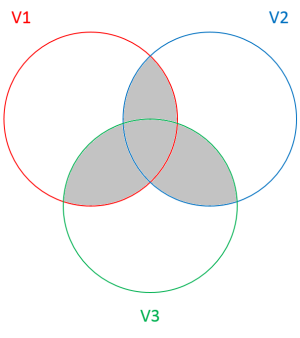

The first one is a measure of the information shared by n variables V1, …, Vn, known as “dual total correlation” (among other names).

This measure of the information shared by different variables can be characterized as:
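
The formula was an image in the original post and is not reproduced in this copy. Assuming the standard definition of dual total correlation, it reads:

```latex
D(V_1, \dots, V_n) = H(V_1, \dots, V_n)
  - \sum_{i=1}^{n} H(V_i \mid V_1, \dots, V_{i-1}, V_{i+1}, \dots, V_n)
```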

where H(V) expresses the entropy of variable V.

When normalized by H(V1, …, Vn), this “mutual information score” takes values ranging from 0% (meaning that the n variables are not at all similar) to 100% (meaning that the n variables are identical, except for the labels).

This measure is symmetric because the information shared by X and Y is exactly the same as the information shared by Y and X.

The Venn diagram above shows the “variability” (entropy) of the variables V1, V2 and V3 with circles. The shaded area represents the entropy shared by the three variables: it is the dual total correlation.
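
As a sketch of how the normalized score could be computed, assuming the standard definition of dual total correlation and the identity H(Vi | others) = H(all) - H(others). The function names and the toy columns `A`, `B`, `C` are illustrative, not from the article.

```python
from collections import Counter
from math import log

def entropy(*columns):
    """Joint entropy (in nats) of zero or more discrete columns."""
    rows = list(zip(*columns))
    counts = Counter(rows)
    return -sum(c / len(rows) * log(c / len(rows)) for c in counts.values())

def dual_total_correlation(*columns):
    """H(V1..Vn) minus the sum of H(Vi | all the other variables)."""
    h_all = entropy(*columns)
    conditional = 0.0
    for i in range(len(columns)):
        others = columns[:i] + columns[i + 1:]
        conditional += h_all - entropy(*others)   # H(Vi | others)
    return h_all - conditional

def mutual_information_score(*columns):
    """Dual total correlation divided by the joint entropy (0.0 to 1.0).

    Undefined (division by zero) if every column is constant.
    """
    return dual_total_correlation(*columns) / entropy(*columns)

A = [0, 0, 1, 1]
B = ["a", "a", "b", "b"]   # same as A, up to the labels
C = [0, 1, 0, 1]           # independent of A in this sample

score_identical = mutual_information_score(A, B)
score_independent = mutual_information_score(A, C)
print(score_identical, score_independent)
```

The two toy cases reproduce the endpoints described above: identical variables (up to labels) score 100%, and variables that share nothing score 0%.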

Asymmetric measure

The symmetry property of the usual correlation measures is sometimes criticized. Indeed, if I want to predict Y as a function of X, I do not care whether X and Y have little information in common: all I care about is that the variable X contains all the information needed to predict Y, even if Y gives very little information about X. For example, if X takes animal species and Y takes animal families as values, then X easily allows us to know Y, but Y gives little information about X:

| Animal species (variable X) | Animal families (variable Y) |
|-----------------------------|------------------------------|
| Tiger                       | Feline                       |
| Lynx                        | Feline                       |
| Serval                      | Feline                       |
| Cat                         | Feline                       |
| Jackal                      | Canid                        |
| Dhole                       | Canid                        |
| Wild dog                    | Canid                        |
| Dog                         | Canid                        |

The “information score” of X to predict Y should then be 100%, while the “information score” of Y for predicting X will be, for example, only 10%.

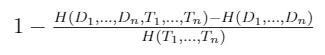

In plain terms, if the variables D1, …, Dn are descriptors, and the variables T1, …, Tn are target variables (to be predicted by descriptors), then such an information score is given by the following formula:
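
The formula was an image in the original post and is not reproduced in this copy. One expression that matches the properties described in this section (0% when the descriptors say nothing about the targets, 100% when they determine them) is the information shared by the two groups divided by the entropy of the targets. This is an assumption, not the author's confirmed definition:

```latex
\mathrm{score}(D \rightarrow T) =
  \frac{H(D_1, \dots, D_n) + H(T_1, \dots, T_n) - H(D_1, \dots, D_n, T_1, \dots, T_n)}
       {H(T_1, \dots, T_n)}
```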

where H(V) expresses the entropy of variable V.

This “prediction score” also ranges from 0% (if the descriptors do not predict the target variables) to 100% (if the descriptors perfectly predict the target variables). This score is, to my knowledge, completely new.

The shaded area in the above diagram represents the entropy shared by the descriptors D1 and D2 with the target variable T1. The difference with the dual total correlation is that the information shared by the descriptors but not related to the target variable is not taken into account.
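
A minimal sketch of an asymmetric score, applied to the species/family table above. It assumes the score is the information shared by descriptors and targets divided by the target entropy; the original formula image is missing, so this definition and the name `prediction_score` are assumptions.

```python
from collections import Counter
from math import log

def entropy(*columns):
    """Joint entropy (in nats) of one or more discrete columns."""
    rows = list(zip(*columns))
    counts = Counter(rows)
    return -sum(c / len(rows) * log(c / len(rows)) for c in counts.values())

def prediction_score(descriptors, targets):
    """Assumed definition: [H(D) + H(T) - H(D, T)] / H(T)."""
    h_t = entropy(*targets)
    shared = entropy(*descriptors) + h_t - entropy(*descriptors, *targets)
    return shared / h_t

species = ["Tiger", "Lynx", "Serval", "Cat", "Jackal", "Dhole", "Wild dog", "Dog"]
family = ["Feline"] * 4 + ["Canid"] * 4

species_to_family = prediction_score([species], [family])
family_to_species = prediction_score([family], [species])
print(round(species_to_family, 3), round(family_to_species, 3))
```

Under this assumed definition the species predicts the family perfectly, while the family recovers only about a third of the information needed to identify the species on this eight-row table. The exact figure in the other direction depends on how many species each family contains.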

Computation of the information scores in practice

A direct method to calculate the two scores presented above is based on the estimation of the entropies of the different variables, or groups of variables.

In R, the entropy function of the ‘infotheo’ package gives us exactly what we need. Calculating the joint entropy of three variables V1, V2 and V3 is very simple:

```r
library(infotheo)

df <- data.frame(V1 = c(0,0,1,1,0,0,1,0,1,1),
                 V2 = c(0,1,0,1,0,1,1,0,1,0),
                 V3 = c(0,1,1,0,0,0,1,1,0,1))
entropy(df)
# [1] 1.886697
```

Computing the joint entropy of several variables in Python requires some additional work. A BIOLAB contributor, on the blog of the Orange software, suggests the following function:

```python
import itertools
from functools import reduce

import numpy as np


def entropy(*X):
    # For every possible combination of values, estimate its probability p
    # as the share of samples matching it, then sum -p * log(p) (in nats).
    return sum(
        -p * np.log(p) if p > 0 else 0
        for p in (
            np.mean(reduce(np.logical_and,
                           (predictions == c for predictions, c in zip(X, classes))))
            for classes in itertools.product(*[set(x) for x in X])
        )
    )


V1 = np.array([0,0,1,1,0,0,1,0,1,1])
V2 = np.array([0,1,0,1,0,1,1,0,1,0])
V3 = np.array([0,1,1,0,0,0,1,1,0,1])

entropy(V1, V2, V3)
# 1.8866967846580784
```

In each case, the entropy is given in nats, the “natural unit of information”.
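
The Python function quoted from the Orange blog loops over every possible combination of values, including combinations that never occur in the data. As a sketch of an alternative (a swapped-in implementation based on `collections.Counter`, not the one from the Orange blog), the same estimate can be obtained by counting only the observed rows:

```python
from collections import Counter
from math import log

def joint_entropy(*columns):
    """Joint entropy in nats, counting only the value combinations that occur."""
    rows = list(zip(*columns))
    n = len(rows)
    return -sum(c / n * log(c / n) for c in Counter(rows).values())

V1 = [0, 0, 1, 1, 0, 0, 1, 0, 1, 1]
V2 = [0, 1, 0, 1, 0, 1, 1, 0, 1, 0]
V3 = [0, 1, 1, 0, 0, 0, 1, 1, 0, 1]

h = joint_entropy(V1, V2, V3)
print(round(h, 6))   # 1.886697
```

The work here grows with the number of samples rather than with the product of the numbers of categories, which helps when there are many variables but most combinations are empty.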

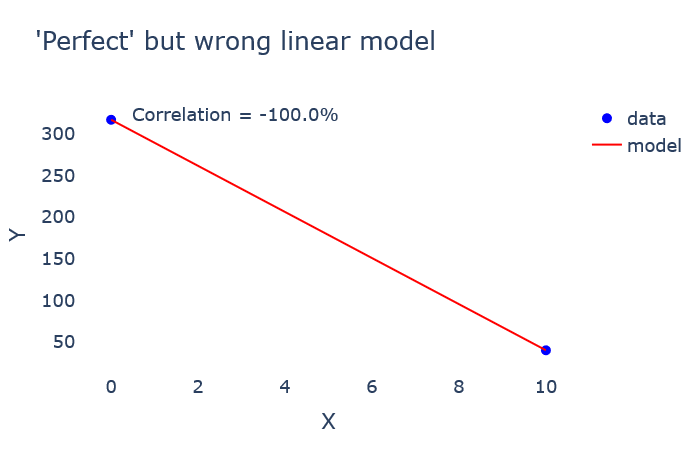

For a high number of dimensions, the information scores are no longer computable in practice, as the entropy calculation becomes too computationally intensive and time-consuming. It is also not desirable to calculate information scores when the number of samples is not large enough compared to the number of dimensions, because the information score then “overfits” the data, just like a classical machine learning model. For instance, if only two samples are available for two variables X and Y, a linear regression will obtain a “perfect” result:

| X  | Y   |
|----|-----|
| 0  | 317 |
| 10 | 40  |

Similarly, let’s imagine that I take temperature measurements over time, making sure to note the time of day for each one. I can then try to explore the relationship between time of day and temperature. If the number of samples I have is too small relative to the number of problem dimensions, the chances are high that the information scores overestimate the relationship between the two variables:

| Temperature (°C) | Hour (0 to 24) |
|------------------|----------------|
| 23               | 10             |
| 27               | 15             |

In the above example, based on the only observations available, the two variables appear to be in perfect bijection: the information scores will be 100%.

It should therefore be remembered that information scores are capable, like machine learning models, of “overfitting”, much more than linear correlation, since linear models are by nature limited in complexity.
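
The overfitting effect is easy to reproduce. In the sketch below, the score is assumed to be the shared information divided by the target entropy (an assumption, since the original formula image is missing), and the six-row data set is hypothetical. Whenever every descriptor value is unique, the score is 100% whatever the target values are.

```python
from collections import Counter
from math import log

def entropy(*columns):
    """Joint entropy (in nats) of one or more discrete columns."""
    rows = list(zip(*columns))
    counts = Counter(rows)
    return -sum(c / len(rows) * log(c / len(rows)) for c in counts.values())

def prediction_score(descriptors, targets):
    """Assumed definition: [H(D) + H(T) - H(D, T)] / H(T)."""
    h_t = entropy(*targets)
    shared = entropy(*descriptors) + h_t - entropy(*descriptors, *targets)
    return shared / h_t

# The two observations from the temperature table above.
two_samples = prediction_score([[10, 15]], [[23, 27]])

# Hypothetical data: six distinct hours, temperatures assigned arbitrarily.
hours = [1, 5, 9, 13, 17, 21]
temps = [20, 25, 20, 25, 25, 20]
arbitrary = prediction_score([hours], [temps])
reordered = prediction_score([hours], [temps[::-1]])

print(round(two_samples, 3), round(arbitrary, 3), round(reordered, 3))
```

All three scores come out at 100%, even though the second and third data sets pair the same hours with differently ordered temperatures. A descriptor that acts as a unique identifier will always look perfectly predictive.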

Example of prediction score use

The Titanic dataset contains information about 887 passengers from the Titanic who were on board when the ship collided with an iceberg: the price they paid for boarding (Fare), their class (Pclass), their name (Name), their gender (Sex), their age (Age), the number of their relatives on board (Parents/Children Aboard and Siblings/Spouses Aboard) and whether they survived or not (Survived).

This dataset is typically used to determine the probability that a person had of surviving, or more simply to “predict” whether the person survived, by means of the individual data available (excluding the Survived variable).

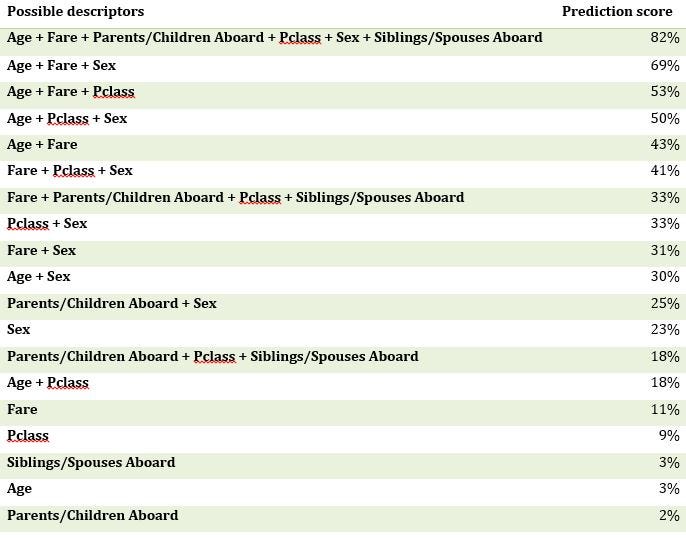

So, for different possible combinations of the descriptors, I calculated the prediction score with respect to the Survived variable. I removed the nominative data, i.e. the Name variable (otherwise the prediction score would be 100% because of overfitting), and discretized the continuous variables. Some results are presented below:
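
The post does not say how the continuous variables (such as Age and Fare) were discretized. One common option, shown here as a sketch with hypothetical ages and hypothetical bin edges, is to map each value to the index of a fixed interval with `numpy.digitize`:

```python
import numpy as np

# Hypothetical ages and bin edges; the post does not state which bins were used.
ages = np.array([5, 18, 30, 40, 70])
edges = [18, 40, 60]

# np.digitize returns, for each value, the index of the interval it falls into:
# 0 for age < 18, 1 for 18 <= age < 40, 2 for 40 <= age < 60, 3 for age >= 60.
age_bins = np.digitize(ages, edges)
print(age_bins.tolist())   # [0, 1, 1, 2, 3]
```

The number of bins matters: more bins preserve more detail but leave fewer samples per combination, which increases the overfitting risk discussed earlier.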

The first row of the table gives the prediction score when all the predictors are used to predict the target variable: since this score is more than 80%, it is clear that the available data enable us to predict the target variable Survived with “good precision”.

Cases of information redundancy can also be observed: the variables Fare, Pclass and Sex are together correlated at 41% with the Survived variable, while the sum of their individual correlations amounts to 43% (11% + 9% + 23%).

There are also cases of complementarity: the variables Age, Fare and Sex are almost 70% correlated with the Survived variable, while the sum of their individual correlations is not even 40% (3% + 11% + 23%).

Finally, if one wishes to reduce the dimensionality of the problem and find a “sufficiently good” model using as few variables as possible, it is better to use the three variables Age, Fare and Sex (prediction score of 69%) than the four variables Fare, Parents/Children Aboard, Pclass and Siblings/Spouses Aboard (prediction score of 33%). This yields about twice as much useful information with one variable fewer.
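
The post does not describe how the combinations of descriptors were enumerated. A brute-force sketch, on hypothetical toy data and with the same assumed score definition as before, is to score every subset and keep the smallest one that reaches the best score:

```python
from collections import Counter
from itertools import combinations
from math import log

def entropy(*columns):
    """Joint entropy (in nats) of one or more discrete columns."""
    rows = list(zip(*columns))
    counts = Counter(rows)
    return -sum(c / len(rows) * log(c / len(rows)) for c in counts.values())

def prediction_score(descriptors, targets):
    """Assumed definition: [H(D) + H(T) - H(D, T)] / H(T)."""
    h_t = entropy(*targets)
    shared = entropy(*descriptors) + h_t - entropy(*descriptors, *targets)
    return shared / h_t

# Hypothetical toy data: Z is X XOR Y, and C is a redundant copy of X.
data = {"X": [0, 0, 1, 1], "Y": [0, 1, 0, 1], "C": [0, 0, 1, 1]}
Z = [0, 1, 1, 0]

# Score every non-empty subset of descriptors (exponential in their number).
scores = {}
for size in range(1, len(data) + 1):
    for subset in combinations(data, size):
        scores[subset] = prediction_score([data[name] for name in subset], [Z])

# Highest score first; among equal scores, the fewest variables first.
ranked = sorted(scores, key=lambda s: (-round(scores[s], 9), len(s)))
best = ranked[0]
print(best, round(scores[best], 3))
```

Exhaustive enumeration is only feasible for a handful of descriptors, since the number of subsets doubles with each added variable; it is shown here because it cannot miss complementary pairs such as X and Y.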

Calculating the prediction score can therefore be very useful in a data analysis project, to ensure that the data available contain sufficient relevant information, and to identify the variables that are most important for the analysis.

Translated from: https://medium.com/@gdelongeaux/how-to-measure-the-non-linear-correlation-between-multiple-variables-804d896760b8
